The new fraud frontiers

Feature: DEEP DIVES INTO A CHANGING WORLD

The message on the clerk’s screen looked suspicious. It was purportedly from the Chief Financial Officer of the multinational firm at whose Hong Kong office she worked, and it was asking her to make a series of confidential payments. Surely this wasn’t legitimate? The sender offered a video call to discuss it all properly. Having accepted the invitation, the clerk found herself in a meeting with the CFO and several other senior colleagues she trusted. Doubts were assuaged. The money was sent.

In all, the clerk transferred $25 million via 15 separate payments. Only then did she contact the company’s head office and realize she had made a terrible mistake. The whole thing had actually been an elaborate scam.

This happened at the start of 2024, as generative AI was making breakthrough leaps in video capabilities. Using the technology, fraudsters had managed to replicate a conference environment, and the colleagues supposedly on screen were, in reality, nothing more than digital avatars. Their appearance, voices, and mannerisms mimicked their real-life counterparts, and they were sufficiently convincing that the worker was duped.

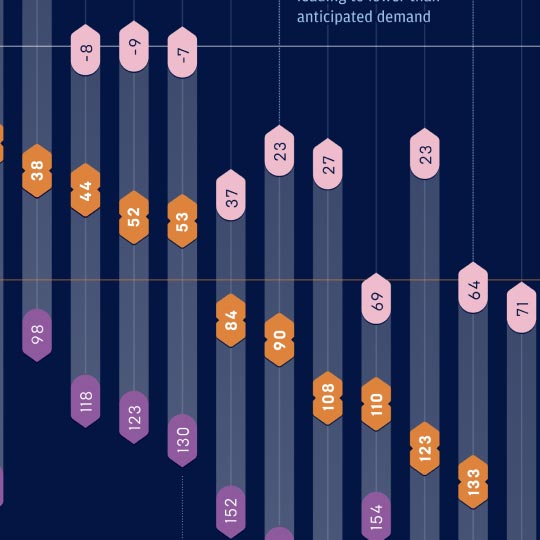

Fraud is a significant problem for business, and it is clearly becoming more sophisticated. Merchant losses due to online payment fraud are expected to soar to $362 billion globally by 2028¹. The damage also goes beyond the stolen sum: on average, the total cost to an organization is approximately three times the value of each fraudulent transaction², because merchants may also incur fees and fines or have to replace stolen goods.

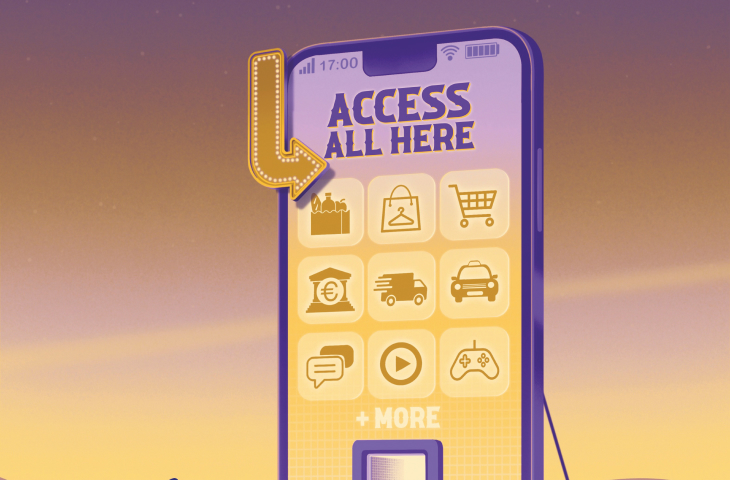

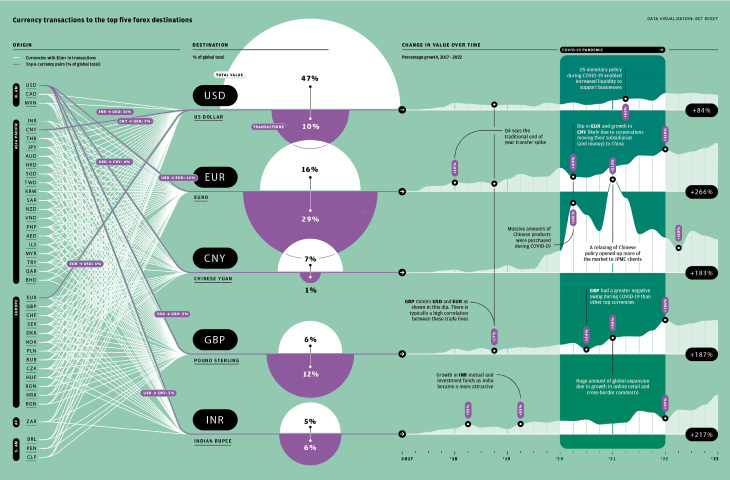

As the world digitalizes, criminals have more opportunities to strike. More than $10 trillion worth of digital transactions took place worldwide in 2023. By 2028, that figure is expected to rise by more than 60 percent, as consumers and businesses continue their shift from cash to online and mobile payments. This improves convenience but adds vulnerability: digital channels currently account for more than half of overall fraud losses³. Changing payment behavior compounds the risk. Customers want greater choice in how they pay, from digital wallets and microloans to “buy now, pay later”, pay-by-bank and real-time rails. These new services and tools, and the need for organizations to adapt to them, create new areas of exposure for the businesses that offer them.

Fraud and its enablers take a wide variety of forms, from social engineering and malware to identity theft and “card not present” scams. Innovations, such as deepfakes, let fraudsters upgrade their methods, opening up new angles of attack or enabling traditional schemes to be performed with greater ease, effectiveness and—crucially—scale. A dark web product called FraudGPT, for example, is a generative AI tool that writes convincing phishing emails and malicious code.

So what can businesses do to keep themselves and their customers secure? Thankfully, just as technology is a boon to criminals, it is also providing organizations with ways to stay on the front foot and shut those threats down.

Here we explore four hot topics in the fight against fraud...

In the late 19th century, the French police officer Alphonse Bertillon had a radical idea: What if, rather than relying on imprecise techniques of interrogation and investigation, law enforcement could identify criminals through unique physical characteristics? Although flawed, “Bertillonage” laid the foundation for fingerprint identification, and thus the modern science of biometrics⁴.

The ubiquity of smartphones with high-quality cameras and touch sensors, combined with advances in computing power and artificial intelligence, means that biometrics are now an everyday part of the user experience for countless businesses. From logging into an account to completing a payment, users can verify interactions via their unique biological markers⁵. The field is evolving fast, and its cutting edge now encompasses everything from facial and voice ID to palm recognition and long-range iris scans.

The strength of biometrics as a security measure, however, has paradoxically created a blind spot for businesses. When a biometric test is passed, it is tempting to take the result at face value. But as we have seen, generative AI can produce convincing deepfakes, and these can fool facial and voice checks, even ones enhanced to detect signs of “liveness”. Breaking biometric security can allow fraudsters to commit identity theft, onboard to other products such as authenticator apps, or simply carry out fraudulent transactions.

Techniques to help businesses combat deepfakes are emerging. One is the use of residual neural networks, deep machine learning models with an aptitude for detecting falsified elements in images and videos. Another involves “challenges” that aim to expose deepfakes by exploiting the limitations of the models behind them. Nasir Memon, the Dean of Computer Science at New York University (NYU) Shanghai, has created a tool, as yet largely untested, that involves cascading challenges. These can be active (requiring the participant to do something such as poking their cheek or holding an object in front of their face) or passive (such as changing the scene illumination by projecting structured light patterns onto the subject). Deepfakes can struggle to pass such tests. “If I don’t know whether you’re a deepfake, I can probe you in some way, send some data in your direction and see how things come back,” he says. Ideas like this could not only help prevent biometric failures but also thwart the kind of social engineering attack that led to the Hong Kong fraud.
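To make the challenge idea concrete, here is a minimal sketch of the orchestration around such a test. It is not Memon’s tool: the challenge names, the `observed` responses (assumed to come from a separate, hypothetical video-analysis model) and the 2-second latency limit are all illustrative assumptions. What it shows is the logic: challenges are drawn at random, so a pre-rendered deepfake cannot anticipate them, and each response must match its challenge and arrive quickly, which makes real-time synthesis harder.

```python
import secrets

# Hypothetical challenge pool: active actions plus passive lighting probes.
CHALLENGES = [
    "poke_left_cheek",
    "hold_object_in_front_of_face",
    "turn_head_right",
    "project_light_pattern_A",
    "project_light_pattern_B",
]


def issue_challenges(count=3):
    """Draw an unpredictable sequence of distinct challenges."""
    pool = list(CHALLENGES)
    sequence = []
    for _ in range(count):
        sequence.append(pool.pop(secrets.randbelow(len(pool))))
    return sequence


def passes_liveness(issued, observed, max_latency_s=2.0):
    """Check each response against the challenge it answers.

    `observed` is a list of (action_seen, latency_seconds) pairs, assumed to
    be produced by a separate (hypothetical) video-analysis model.
    """
    if len(observed) != len(issued):
        return False
    for challenge, (action_seen, latency) in zip(issued, observed):
        if action_seen != challenge or latency > max_latency_s:
            return False
    return True


issued = issue_challenges()
genuine = [(c, 0.8) for c in issued]
print(passes_liveness(issued, genuine))  # True
slow = [(c, 5.0) for c in issued]
print(passes_liveness(issued, slow))  # False
```

Cascading the challenges, as Memon describes, would simply mean issuing further rounds whenever a response looks borderline.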

An alternative avenue of research uses secondary factors to support the biometric test. One approach is to inspect aspects of the input for consistency. If a facial scan purportedly comes from a phone whose camera captures images at a particular resolution, for example, an image arriving at a different resolution would be a red flag. Another possibility is to involve secret knowledge: a voice-based system, for example, might ask the user to say a phrase that only they know.
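The resolution cross-check can be sketched in a few lines, assuming the client reports its device model and that the system keeps a hypothetical lookup table of camera resolutions (the model names and sizes below are invented). It is deliberately simplified: real cameras offer several capture modes, so a production table would hold a set of valid sizes per device, and this would be one signal among many rather than a verdict.

```python
# Hypothetical lookup table: device model -> front-camera frame size (w, h).
KNOWN_CAMERA_RESOLUTIONS = {
    "phone-model-a": (1920, 1080),
    "phone-model-b": (1280, 720),
}


def resolution_red_flag(claimed_model, frame_width, frame_height):
    """Return a reason string if the frame doesn't fit the claimed device, else None."""
    expected = KNOWN_CAMERA_RESOLUTIONS.get(claimed_model)
    if expected is None:
        return "unknown device model"
    # Accept either landscape or portrait orientation.
    if (frame_width, frame_height) not in (expected, expected[::-1]):
        return f"frame {frame_width}x{frame_height} does not match {claimed_model}"
    return None


print(resolution_red_flag("phone-model-b", 1280, 720))   # None
print(resolution_red_flag("phone-model-b", 1920, 1080))  # frame 1920x1080 does not match phone-model-b
```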

Since the ongoing evolution of technology threatens to make many anti-deepfake techniques almost obsolete as soon as they see the light of day, that kind of holistic thinking is essential. “Deepfakes are a greater concern when a business is only assessing a limited number of factors for risk. Good fraud detection should involve assessing a bunch of different factors in aggregate,” says Mike Frost, Senior Product Specialist at J.P. Morgan. He also cites changing payment instructions, use of a new phone number, a change of device or an account being accessed at atypical times of the day as examples of factors that can be evaluated to help build a detailed picture of suspicious activity.
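Frost’s point about assessing factors in aggregate can be sketched as a weighted score over the signals he lists. The weights and the simple additive form are illustrative assumptions, not J.P. Morgan’s method; production systems typically learn how much each signal matters from labeled historical outcomes.

```python
from dataclasses import dataclass


@dataclass
class PaymentContext:
    payment_instructions_changed: bool
    new_phone_number: bool
    new_device: bool
    login_hour: int  # 0-23, in the account holder's local time


# Illustrative weights only; a real system would calibrate these on past cases.
WEIGHTS = {
    "payment_instructions_changed": 0.35,
    "new_phone_number": 0.20,
    "new_device": 0.20,
    "atypical_hour": 0.25,
}


def risk_score(ctx, usual_hours=range(7, 23)):
    """Combine several weak signals into one score between 0 and 1."""
    signals = {
        "payment_instructions_changed": ctx.payment_instructions_changed,
        "new_phone_number": ctx.new_phone_number,
        "new_device": ctx.new_device,
        "atypical_hour": ctx.login_hour not in usual_hours,
    }
    return sum(WEIGHTS[name] for name, fired in signals.items() if fired)


# No single signal is decisive, but together they paint a clearer picture.
print(round(risk_score(PaymentContext(False, False, True, 14)), 2))  # 0.2
print(round(risk_score(PaymentContext(True, True, True, 3)), 2))     # 1.0
```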

“The tactics that we’ve tried to use are less related to technology and more about practices,” agrees his colleague Steven Bufferd, Managing Director of Trust & Safety Products at J.P. Morgan. “How do we validate it? Are there sources we can trust? Is the change of payment instruction legitimate? Is that a real person? Can we validate based on the information you’ve given us? Trying to ascertain whether a person is real should not just be a technological endeavor, but one in which technology assists and augments human-based best practices.”

In the mid-1960s, MIT Professor Fernando Corbató had an idea. He had led the development of a time-sharing system that let multiple people use the same computer. Each user would have their own private files, so needed their own account. How to protect that account? Simple: A password.

Corbató is widely credited as the inventor of the computer password, and his creation is still with us today. When a business signs up a new user, they typically secure that account with a password. It’s the default, time-tested digital security tool, and it has persisted for a reason. It’s straightforward, cost-efficient, and can be easily changed.

Passwords are also insecure. Around 70 to 80 percent of online data breaches are the result of password theft, according to Andrew Shikiar, CEO of the FIDO Alliance (FIDO), an industry association focused on reducing online fraud. Fraudsters have an array of ways to steal passwords, such as deepfake voice calls, phishing emails, or mobile interface attacks, in which the display and touch input of the victim’s phone are revealed to the criminal. Too often, individuals and organizations use one password for multiple services, so one breach can lead to further compromises⁶. Advances in technology only heighten the risks. Generative AI is adept at writing code, for example, making it easier to create password-stealing malware that’s hard to detect. “The fundamental issue is that we’ve relied on knowledge-based authentication for too long,” says Shikiar. “There are also major security and usability issues. I think we can all relate to how challenging passwords can be to utilize effectively.”

The downsides for businesses are pronounced. If a fraudster obtains a user’s password, they might have an unchecked ability to make card-on-file payments or use the account as a springboard for committing further fraudulent acts. In an effort to upgrade password security, many businesses are demanding that users recall increasingly complex combinations of words, symbols, and numbers to access their accounts. That, too, has a trade-off, as it adds friction to the user experience.

No wonder technologists have long wanted to kill passwords. But no viable alternative has presented itself—at least, not until recently. The answer, according to FIDO, is passkeys.

A passkey is a cryptographic key pair unique to each account. The private key stays on the user’s device (such as a phone, laptop, or tablet), while its counterpart public key is stored on the app or website server. When the user tries to log in, the device uses the private key to sign a one-time challenge sent by the server, and the server checks that signature against the public key. If the two keys are cryptographically proven to be a pair, the user can access their account. The promise is twofold: since the private key is not stored externally, it is not vulnerable to data breaches; and because it can only be used on the device on which it was created, it can’t be used elsewhere anyway⁷. A further upshot is that a company that does not have to store sensitive password data reduces its business risks, including the possibility of reputational harm. Passkeys aren’t just more secure: the process of using them can also be 40 percent faster⁸, allowing businesses to make their user experiences more seamless. More than 100 organizations have already adopted passkeys as a sign-in option, including e-commerce companies, fintechs, and the UK’s National Health Service⁹.
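The login flow is a challenge-response protocol built on digital signatures. The sketch below illustrates that idea with a toy Schnorr signature over deliberately tiny numbers, using only the Python standard library. It is insecure and is not how any real passkey implementation works internally; passkeys built on the FIDO and WebAuthn standards commonly use elliptic-curve signatures such as ECDSA on the P-256 curve. All function names here are hypothetical.

```python
import hashlib
import secrets

# Toy parameters: P = 2*Q + 1 is a safe prime and G = 4 generates the subgroup
# of prime order Q. Far too small to be secure; for illustration only.
P, Q, G = 2039, 1019, 4


def generate_keypair():
    private = secrets.randbelow(Q - 1) + 1  # stays on the user's device
    public = pow(G, private, P)              # registered with the website
    return private, public


def _challenge_hash(commitment, message):
    digest = hashlib.sha256(commitment.to_bytes(2, "big") + message).digest()
    return int.from_bytes(digest, "big") % Q


def sign(private, message):
    """Device side: sign the server's challenge with the private key."""
    nonce = secrets.randbelow(Q - 1) + 1
    commitment = pow(G, nonce, P)
    e = _challenge_hash(commitment, message)
    s = (nonce + private * e) % Q
    return e, s


def verify(public, message, signature):
    """Server side: check the signature using only the stored public key."""
    e, s = signature
    # G^s * public^(-e) recovers the commitment G^nonce if the signature is valid.
    commitment = (pow(G, s, P) * pow(public, Q - e, P)) % P
    return _challenge_hash(commitment, message) == e


# Registration: the server stores only the public key.
device_key, server_record = generate_keypair()

# Login: the server sends a fresh random challenge; the device signs it.
challenge = secrets.token_bytes(16)
print(verify(server_record, challenge, sign(device_key, challenge)))  # True
```

Because the server keeps only `server_record`, a breach of its database yields nothing that can sign a future challenge; with realistic key sizes, recovering the private key from the public one is computationally infeasible. A fresh challenge per login also means a captured signature cannot simply be replayed.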

However, while passwords might be less secure and less convenient for many users, they may be hard to shake. FIDO says that just 39 percent of people it surveyed are familiar with passkeys¹⁰. “People might not love passwords, but they know how to use them,” says Shikiar. “Passkeys are a new concept and people need to become familiar with it.” Shikiar points to the growing use of biometrics as an example of how consumer behavior changes as people become more comfortable with technology.

And it’s important to note that passkeys also have their downsides. It’s harder to sync them across devices, and there are still relatively few apps and websites that support the technology. It can also be a convoluted process to recover access to an account if the user loses all their devices. “You need to talk about what demographics and areas this is likely to start in. It’s going to be younger people that are more comfortable with different technology,” says Frost from J.P. Morgan. “And there are going to be certain industries, users, and workloads where there is a business incentive to do away with passwords.”

Data breaches are expensive. When a security violation leads to the unauthorized use or disclosure of confidential data, the average cost to an organization is now $4.45 million. That’s a record high, and more than 15 percent greater than the average cost in 2020¹¹.

Data breaches often begin with an act of deception. Fraudsters typically socially engineer employees to open a malicious file or hand over their credentials. The ways that modern businesses operate create extra vulnerabilities. Enterprise computing set-ups often involve cloud environments, which means data can be accessed by more people across a much broader potential “attack surface”. That attack surface can also include supply chain partners, and supply chain vendors are a prominent source of breaches¹². The professionalization of cybercrime, combined with new hacking automation tools such as XXXGPT, makes it more likely that criminals will take advantage in order to steal identities or carry out payments fraud.

To protect data, businesses typically encrypt it. But data then has to be decrypted before it can be used—including for the detection of fraud itself—at which point it potentially becomes vulnerable. Enter homomorphic encryption (HE), which has its origins at the Massachusetts Institute of Technology (MIT) in 1978. HE enables computation to be performed on data while it remains encrypted.

Vinod Vaikuntanathan, an MIT Professor of Electrical Engineering and Computer Science and the co-inventor of most modern fully homomorphic encryption systems, explains the concept with an analogy. “You can think of traditional encryption as putting your data inside a locked box. You send that locked box over a channel and the person at the other end has a key. They can unlock the box and get the answer,” he says. “Homomorphic encryption, on a basic level, is being able to take a locked box and manipulate the contents within without opening it up.”

While this has obvious advantages for data security—businesses could ask a third-party provider of powerful cloud computers to work on data without introducing risk, for example—there is a specific use case for HE in detecting fraud, because HE has the potential to facilitate safe data sharing. In financial services, multiple entities could securely work together to identify suspicious activity. “If you think of two or more banks, they each have their own data about their customers’ transactions. But fraud often operates across many banks, often with small transactions that flow across multiple accounts,” says Vaikuntanathan. “It’s very hard to detect, if your visibility is limited to your own network. So, can we enable people to compute across all that data, and identify suspicious operations? That is something that is being worked on.”
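The “locked box” idea can be demonstrated with the Paillier cryptosystem, a well-known partially homomorphic scheme that supports addition only; it is a simpler relative of the fully homomorphic systems Vaikuntanathan works on, which also support multiplication. The sketch below uses deliberately tiny, insecure primes and imagines two banks encrypting their totals for a shared account so that an aggregator can add them without seeing either figure. The setup, including who holds the decryption key, is a hypothetical simplification.

```python
import math
import secrets


def generate_keys(p=1009, q=1013):
    """Toy Paillier key generation with tiny primes (insecure, illustration only)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because we use the generator g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # fresh randomness for each ciphertext
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(n, private, c):
    lam, mu = private
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the hidden plaintexts (mod n)."""
    return (c1 * c2) % (n * n)


n, private = generate_keys()
bank_a = encrypt(n, 4_200)  # Bank A's total for a shared account
bank_b = encrypt(n, 9_800)  # Bank B's total for the same account
combined = add_encrypted(n, bank_a, bank_b)  # computed without decrypting
print(decrypt(n, private, combined))  # 14000
```

Because of the random `r`, the same amount encrypts differently each time, so the aggregator cannot even tell whether the two banks reported equal figures. Real deployments use primes of around 1,024 bits or more, which hints at why the computational cost grows quickly.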

HE is not yet a widespread technology. It is secure, and simple computations often take just seconds to execute, but on large and complex datasets, especially when paired with equally complex algorithms, there is a significant computational “overhead.” This often makes large-scale implementations impractical in real-world financial settings¹³. However, Vaikuntanathan believes this may change. “That will either happen through finding ways to rewrite your computations so they’re more amenable to homomorphic approaches, which could be assisted by machine learning, or via better hardware,” he says. “There is lots happening with hardware, although unlike with AI, it’s not the compute that’s the bottleneck, it’s memory.”

If the problems can be solved, HE has an opportunity to proliferate—perhaps to the stage where we never need to decrypt encrypted data. “I think we have a reasonable shot of making it in, let’s say, a decade,” says Vaikuntanathan. “I’m putting all my time and energy where my conviction is, which is in inventing new techniques to make this faster.”

Artificial intelligence is giving fraudsters an array of new tools that allow them to increase the scale, scope, and sophistication of their activities. But AI is also a crucial way of tackling the threat. The challenge is that machine learning depends on historical data, and this is often not available for newer forms of fraudulent behavior, leaving businesses on the back foot.

One use of generative AI in particular can help address this problem: producing synthetic data. Synthetic data is an imitation of a real dataset that can be used to train AI models. It is cheap, can be produced on demand, and can be designed to contain fewer biases¹⁴. Another upshot is that it can be used to model less common, edge-case behavior. A payments company, for example, could use synthetic data to build systems that can identify merely hypothesized or forecasted examples of suspicious activity. “If you have a ML model that is able to learn fast, you can come up with some synthetic scenarios that have never happened before and teach your machine learning model to identify that fraudulent activity,” says Blanka Horvath, Associate Professor in Mathematical and Computational Finance at the University of Oxford. That might be particularly useful in the context of new technologies, such as real-time payments, where relatively little historical data exists.
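As a minimal sketch of the idea, the generator below produces labeled synthetic transactions from simple assumed distributions and injects a hypothetical, never-observed fraud scenario: a rapid burst of small real-time payments to a new payee. The field names, distributions and scenario are all invented for illustration; generative-AI approaches would instead learn the shape of normal activity from real data.

```python
import random


def synthetic_transactions(n_normal=1000, n_bursts=5, seed=7):
    """Generate labeled synthetic payment records (illustrative distributions)."""
    rng = random.Random(seed)  # seeded, so a dataset can be reproduced exactly
    records = []
    # Ordinary activity: log-normally distributed amounts, spaced out in time.
    for _ in range(n_normal):
        records.append({
            "amount": round(rng.lognormvariate(4.0, 1.0), 2),
            "seconds_since_prev": rng.randint(600, 86_400),
            "new_payee": rng.random() < 0.05,
            "is_fraud": False,
        })
    # Hypothetical edge case: bursts of small, rapid payments to a new payee.
    for _ in range(n_bursts):
        for _ in range(rng.randint(8, 15)):
            records.append({
                "amount": round(rng.uniform(5, 50), 2),
                "seconds_since_prev": rng.randint(1, 30),
                "new_payee": True,
                "is_fraud": True,
            })
    rng.shuffle(records)
    return records


data = synthetic_transactions()
fraud_share = sum(r["is_fraud"] for r in data) / len(data)
print(f"{len(data)} records, {fraud_share:.1%} labeled fraudulent")
```

Changing the injected scenario, rather than waiting for it to appear in real data, is what lets a model be trained on fraud that has only been forecast.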

Synthetic data can be applied to a range of scenarios within the payments sector, from identifying money-laundering behavior to detecting suspicious patterns in payment records, customer journeys, or transaction histories¹⁵,¹⁶. One particular advantage for financial services is that synthetic data can stand in when data privacy regulations make it impossible to use real data, even when that data is available.

Synthetic data is not a panacea. It is challenging for businesses to validate whether a model trained on a synthetic dataset is effective, at least in the short term. Is it flagging too much legitimate activity as fraud? How effective is it at identifying anomalies?

There are a number of ways to bridge the real-synthetic gap. Businesses can benchmark the outcomes of synthetic models against those of comparable real-world ones; they can use “adversarial training” to improve the verisimilitude of synthetic data; and they can fine-tune synthetic models on real-world data. It’s likely that augmenting real-world data with synthetic data that is generated from and modeled on those real-world records will be especially important to the payments industry.
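One way to answer the questions above, and to benchmark a synthetic-trained model against a real-world one, is to score both on the same held-out set of real, labeled transactions and compare two numbers: the false-positive rate (legitimate activity wrongly flagged) and recall (the share of fraud caught). The sketch computes both; the two candidate detectors and the five-record holdout set are hypothetical placeholders, not real results.

```python
def evaluate(detector, labeled):
    """Return (false_positive_rate, recall) of `detector` on (record, is_fraud) pairs."""
    tp = fp = fn = tn = 0
    for record, is_fraud in labeled:
        flagged = detector(record)
        if flagged and is_fraud:
            tp += 1
        elif flagged:
            fp += 1
        elif is_fraud:
            fn += 1
        else:
            tn += 1
    fpr = fp / (fp + tn) if fp + tn else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return fpr, recall


def candidate_a(record):
    # Hypothetical rule, e.g. one learned from synthetic data: small, rapid payments.
    return record["amount"] < 50 and record["seconds_since_prev"] < 60


def candidate_b(record):
    # Hypothetical rule, e.g. one learned from historical real data: large payments.
    return record["amount"] > 1_000


# Tiny made-up holdout set standing in for real, labeled transactions.
holdout = [
    ({"amount": 20, "seconds_since_prev": 10}, True),
    ({"amount": 35, "seconds_since_prev": 25}, True),
    ({"amount": 40, "seconds_since_prev": 45}, False),
    ({"amount": 1_500, "seconds_since_prev": 3_600}, False),
    ({"amount": 80, "seconds_since_prev": 7_200}, False),
]

for name, detector in [("candidate_a", candidate_a), ("candidate_b", candidate_b)]:
    fpr, recall = evaluate(detector, holdout)
    print(f"{name}: false-positive rate {fpr:.2f}, recall {recall:.2f}")
```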

This configuration can help unearth the broader characteristics of fraudulent transactions. “Our view has been to use internal data and start to model against what we see happening within the real world,” says Steven Bufferd of J.P. Morgan. Then it becomes a matter of using signals from that data to “pull information that highlights why transactions were fraudulent.” This data, he says, can be channeled into scoring models that uncover trends and patterns, helping the company proactively nip fraud in the bud.

Tackling fraud is a complex undertaking. The picture is made more complicated still by regulation-related challenges.

The increasingly connected nature of payments is driving more cross-border activity, creating uncertainty about which rules apply where and how. Mike Frost from J.P. Morgan notes the tension between this cross-border flow of payments data and the legal requirements in some jurisdictions that compel companies to only hold and process data domestically. “More governments are mandating that data remain within the borders of a nation,” he says. “Finding ways to leverage that data for accurate fraud detection while complying with these regulations remains a challenge.”

With payments becoming faster, more digital, and geographically broader in scope, both regulators and the payments sector will need to work hand-in-hand to find standards that offer protection without stifling either market competition or consumer choice¹⁷. That, says Vincent Meluzio, J.P. Morgan Product Solutions Director, will place increased emphasis on the need to establish strong procedures for managing and monitoring identities and the flow of information.

The need to stay ahead of emerging threats is particularly acute at a time when fraudsters rely less on careful selection and targeting, and more on sheer volume and velocity. So, ultimately, what does a robust fraud-prevention process look like? “An extensive range of validation instruments that create a mosaic of signals, which bring greater clarity to decisions about fraud. Couple that with a strong human element, along with the right kind of automation in the right places,” says Meluzio. “This is what makes the human process of ‘intelligent exception handling’ so key. It’s really difficult for fraudsters to get through a web of multimodal indicators that arm you with greater context to make a decision, supported by a robust process that you follow through with. And an organizational culture that is disciplined enough to stick with the process, even under extreme pressure.”
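Combining automation with human judgment is often implemented as a triage step: clear-cut cases are handled automatically, and the ambiguous middle band is escalated to a person. A minimal sketch, assuming a risk score between 0 and 1 from some upstream model and two hypothetical thresholds:

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human review"
    BLOCK = "block"


def triage(risk_score, approve_below=0.3, block_at=0.9):
    """Automate the clear cases; route the uncertain middle band to a person."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score < approve_below:
        return Decision.APPROVE
    if risk_score >= block_at:
        return Decision.BLOCK
    return Decision.HUMAN_REVIEW


for score in (0.1, 0.55, 0.95):
    print(score, triage(score).value)
```

Where the thresholds sit determines how many cases land on reviewers’ desks, so they are a business decision as much as a technical one.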

SOURCES AS PER WIRED, MAY 2024

ILLUSTRATION: STEPHAN SCHMITZ